Measuring the Size of the World

28 November 2020

Saturday

A Scientific Research Program That Never Happened

Carl Sagan liked to characterize the Library of Alexandria as a kind of scientific research institution in classical antiquity:

“Here was a community of scholars, exploring physics, literature, medicine, astronomy, geography, philosophy, mathematics, biology, and engineering. Science and scholarship had come of age. Genius flourished there. The Alexandrian Library is where we humans first collected, seriously and systematically, the knowledge of the world.”

Carl Sagan, Cosmos, 1980, pp. 18-19

Clearly, before the scientific revolution there were many intimations of modern science, but these intimations, these examples we have of scientific knowledge prior to the scientific revolution, are isolated, and almost always the work of a single individual, like Archimedes or Eratosthenes. Thus Sagan used the example of the Library at Alexandria to suggest that there was, in classical antiquity, at least an intimation of a research institution in which scholars worked in collaboration with each other. How accurate a picture is this of science in antiquity?

The philosopher of science Imre Lakatos used the phrase “scientific research program” to refer to a number of scholars working jointly on a common set of problems in science. These scholars need not know each other, or work together in the same geographical location, but they do need to know each other’s work in order to respond to it, to question it, to elaborate upon it, and to thus contribute together to the growth of scientific knowledge, not as an isolated scientist producing an isolated result, but as a community of scholars with shared methods, shared assumptions, and shared research goals. Did any scientific research programs in this sense exist in classical antiquity? And was the Library at Alexandria a focal point for ancient scientific research programs?

If there were scientific research programs in antiquity, I am unaware of any evidence for this. No doubt at some humble scale shared scientific inquiry did take place in classical antiquity, but no surviving accounts describe research undertaken in this way. In Athens, and later at Rome and Constantinople, the ancient philosophical schools did exactly this, investigating common research problems in a collegial atmosphere of shared research findings, and since there was, in classical antiquity, no distinction made between science and philosophy, we can assert with confidence that scientific research institutions (Plato’s Academy and Aristotle’s Lyceum) existed in antiquity, and scientific research programs existed in antiquity (Platonism, Aristotelianism, etc.), but even as we assert this we know that this was not science as we know it today.

When universities were founded in the Middle Ages, these universities inherited the tradition of philosophical inquiry that the ancient schools had once cultivated, and they added theology and logic to the curriculum. If there is any research program in a recognizable science that extends through western history all the way to its roots in classical antiquity, it is the research program in logic, which we can find in antiquity, in the Middle Ages, and in the modern period still today. But the Scholasticism that dominated medieval European universities was, again, nothing like the science we know today. Scholars did collaborate on a large, even a multi-generational research program, in Aristotelianism and Christian theology, and in the late Middle Ages this began to approximate natural science as we have known it since the scientific revolution, but when the scientific revolution began in earnest, it often began as a rejection of the universities and Scholasticism, and the great thinkers of the scientific revolution distanced themselves from the tradition in which they themselves were educated.

One could even say that Galileo worked essentially in isolation, when, during the final years of his life, living under house arrest due to the findings of the Inquisition, he pursued his research into the laws of motion in his own home, building his own experimental apparatus, writing up his own results, and being segregated from wider society by his sentence. What is different in the case of Galileo however, what places Galileo in the context of the scientific revolution, rather than being simply another isolated scholar like Archimedes or Eratosthenes, perhaps with a small circle of intimates with whom they shared their research, is that Galileo’s work was shortly thereafter taken up by many different individuals, some of them working in near isolation like Galileo, while others worked in community (in some cases, in literal religious communities).

Alexandre Koyré’s 1952 lecture “An Experiment in Measurement” (collected in Metaphysics and Measurement) details how Filippo Salviati, Marin Mersenne, and Giovanni Battista Riccioli all took up and built upon Galileo’s work. Some of the experiments undertaken by Mersenne and Riccioli demanded an almost heroic commitment to the attempt to make precision observations despite the technical limitations of their experimental apparatus. Riccioli built several pendulums and, with the aid of nine Jesuit assistants, counted every swing of a pendulum over a period of twenty-four hours. The many individuals who were inspired by Galileo to replicate his results, or to try to prove that they could not be replicated, are what made the scientific revolution different from scientific knowledge in earlier history, and this community of scientists working on a common problem is more-or-less what Lakatos meant by a scientific research program.

Since the scientific revolution we have any number of examples of the work of a gifted individual being the inspiration for others to build upon that original work, as in the case of Carl von Linné (better known today as Linnaeus), whose followers were called the Apostles of Linnaeus, and who spread out across the world collecting and classifying botanical specimens according to the binomial nomenclature of Linnaeus. During Linnaeus’ lifetime, another truly remarkable scientific research program was the French Geodesic Mission, which sent teams to Lapland and Ecuador in order to measure the circumference of the Earth around the equator and around the poles, to see which distance was slightly longer than the other.

While there were, in classical antiquity, curious individuals who traveled widely and who attempted to gather empirical data from many different locations, there was no community of scholars, inside or outside any institution, that, prior to the scientific revolution, engaged in this kind of research. We can imagine, as an historical counter-factual, if other scholars in antiquity had been sufficiently interested in Eratosthenes’ estimate of the size of the Earth that they had sought to replicate Eratosthenes’ work with the same passion and dedication to detail that we saw in the work of Mersenne and Riccioli. Imagine if the Library at Alexandria had conducted Eratosthenes’ experiment not only in Alexandria and Syene, but also had sent teams to the furthest reaches of the ancient world — the great cities of Asia, Europe, and North Africa — and had repeated their investigations with increasing precision with each generation of experiments.

If Eratosthenes’ determination of the circumference of Earth had been the object of such a scientific research program in classical antiquity, there would have been no need of the French Geodesic Mission two thousand years later, as these results would already have been known. And given that Eratosthenes lived during the third century BC, the stable political and social institutions of the ancient world still had hundreds upon hundreds of years to go; the wealth and growth of the ancient world was still all to come in the time of Eratosthenes. There was, in the post-Eratosthenean world, plenty of wealth, plenty of time, and plenty of intelligent individuals who could have followed up upon the work of Eratosthenes as Mersenne and Riccioli followed up on the work of Galileo, and the Apostles of Linnaeus followed up on the first research program of scientific botany. But none of this happened. The scientific knowledge that Eratosthenes formulated was preserved by others and repeated in a few schools, but no one picked up the torch and ran with it.

Why did no Eratosthenean scientific research program appear in classical antiquity? Why did scientific research programs appear during the scientific revolution? I cannot answer these questions, but I will note that these questions could constitute a scientific research program in history, which continues today to be weak in regard to scientific research programs. History today is in a state of development similar to natural science in medieval universities, before the scientific revolution. This suggests further questions. Why was there a scientific research program in logic that has been continuous throughout the history of western civilization (including the Middle Ages, which was particularly brilliant in logical research)? Why has history escaped, so far, the scientific revolution?

. . . . .

Addendum on the Elizabethan Conception of Civilization

21 October 2019

Monday

In The Elizabethan Conception of Civilization I examined some of the nomothetic elements of the otherwise idiosyncratic character of Elizabethan civilization. In that post I emphasized that the large-scale political structure of European civilization in the medieval and modern periods entails an ideology of kingship in which the monarch himself or herself becomes the reproducible pattern for his or her subjects to follow.

There is another source of nomothetic stability in the case of idiographic Elizabethan civilization, and that is the long medieval inheritance that was still a living presence in early modern society. The classic exposition of the Elizabethan epistēmē (as perhaps Foucault would have called it) is E. M. W. Tillyard’s book The Elizabethan World Picture, which emphasizes the medieval heritage of Elizabethan society. The elements of the medieval world view that Tillyard rightly finds surviving into the conceptual framework of Elizabethan England could be understood as the invariant and continuous elements that constitute the nomothetic basis of Elizabethan civilization.

Peter Saccio, in his lectures Comedy, Tragedy, History: The Live Drama and Vital Truth of William Shakespeare (this was the first set of lectures that I acquired from The Teaching Company, which has since changed its name to The Great Courses, but it was as The Teaching Company that these lectures were first made available), briefly discussed Tillyard’s book and its influence, which he characterized as primarily conservative. Saccio noted that recent Shakespeare scholarship has focused to a much greater extent on the radical interpretations of Shakespeare. As goes for Shakespearean theater, so it goes for Elizabethan society. We could give a conservative Tillyardian exposition of Elizabethan society that portrays that society primarily in terms of its medieval inheritance, or we can give a more radical exposition of Elizabethan society that portrays that society in terms of the rapid changes and innovations in society at this time.

While Elizabethan civilization retained many deeply conservative elements drawn from the medieval past, the underlying theme of Elizabethan civilization — the consolidation of the Anglican Church as a state institution — was in fact among the most radical changes possible to a social structure within the early modern context of civilization, and may be compared to Akhenaten’s attempt to replace traditional Egyptian mythology with a quasi-monotheistic solar cult. But whereas Akhenaten’s religious innovations did not endure, with the kingdom reverting to traditional religious practices after Akhenaten’s death (i.e., the central project of Egyptian civilization survived Akhenaten), the religious innovations of Elizabeth I did endure.

Up until the Enlightenment, almost all civilizations had, as their central project, or integral with their central project, a religion (or, more generally, a spiritual tradition). If we regard the Enlightenment as a secularized ersatz religion (or, if you prefer, a surrogate religion), then this has not changed to the present day. Regardless, changing the religion that is identical with, or is integral to, the central project of one’s civilization, is akin to making changes to the center of the web of belief (to employ a Quinean motif) rather than merely making changes at the outer edges of the web.

The Protestant Reformation in England, then, can be understood as the opening of Pandora’s Box. While retaining the forms of tradition to the extent possible, the establishment of the Anglican Church demonstrated that even the central project of a civilization can be changed out at the whim of a monarch, and this was as much as to demonstrate that everything hereafter was up for grabs. Subsequent history was to bear this out. One might even say that regicide was implicit in the fungibility of early modern England’s central project, but it took a hundred years for that to play out (on civilizational time scales, a hundred years is a reasonable lead time for causality). If you can change your church, why not cashier your king?

Many years ago in the early history of this blog, I wrote some posts about Christopher Hill’s book The World Turned Upside Down: Radical Ideas during the English Revolution (in The Agricultural Paradigm and The World Turned Right-Side Up; cf. also the links embedded in these posts), which book is a somewhat sympathetic history of the radical movements in early modern England represented by groups like the Ranters, the Diggers, the Levelers, and the True Levelers. Hill imagined that a much more radical revolution might have emerged from Elizabethan England and the revolutionary movements that followed. Now, in the spirit of what I wrote above, I can ask whether, if you can change the central project of your civilization, cannot you also go down the path of the kind of radical revolution that Hill imagined, toward communal property, disestablishing the state church, and rejecting the Protestant Ethic?

This question points to something important, I think, but I will not attempt at this time to give an exposition of what all is involved, because it has only just now occurred to me while writing this. While I have come to see the Protestant Reformation as opening Pandora’s Box in England, I think there is also a limit to the amount of revolution that a population can stomach. As wrenching as it is to replace the central project of your civilization, or to execute your king, it would be even more wrenching to attempt to uproot the whole of the ordinary business of life. Certainly you wouldn’t want to attempt to do both at the same time. If I am right about this, how then would we draw a line between the ordinary business of life, that is to remain largely undisturbed, and the extraordinary business of life, in which a population can tolerate violent punctuations and periods of instability?

. . . . .

The Victorian Achievement

3 May 2018

Thursday

There is not only an insufficient appreciation of the Victorian achievement in history, but perhaps more importantly there is an insufficient understanding of the Victorian achievement. Victorian civilization (and I will avail myself of this locution understanding that most would allow that Victorianism was a period in the history of western civilization, but not itself a distinct civilization) achieved nothing less than the orderly transition from agricultural civilization to industrialized civilization. As such, Victorianism became the template for other societies to make this transition without revolutionary violence.

The transition from agriculturalism to industrialism was the most disruptive in the history of civilization, and can only be compared in its impact to the emergence of agricultural civilization from pre-civilized nomadic hunter-gatherers. But whereas the transition from hunter-gatherer nomadism to settled agriculturalism occurred over thousands of years, the transition from agricultural civilization to industrialized civilization has in some cases occurred within a hundred years (though the transition is still underway on a planetary scale). The Victorians not only managed this transition, and were the first people in history to manage this transition, they moreover managed this transition without catastrophic warfare, without the widespread breakdown of civil order, and with a certain sense of style. It could be argued that, if the Victorians had not managed this transition so well, rather than a new form of civilization taking shape, the industrial revolution might have resulted in the collapse of civilization and a new dark age.

Today we think of Victorianism as a highly repressive social and cultural milieu that was finally cast aside with the innovations of the Edwardian era and then the great scientific and social revolutions that characterized the early twentieth century and which then instituted dramatic social and cultural changes that ever after left the Victorian period in the shadows of history. But Victorian repression was not arbitrary; it served a crucial social function in its time, and it may well have been the only possible social and cultural mechanism that would have made it possible for any society to be the first to make the transition from agriculturalism to industrialism.

Victorianism not only made an orderly transition possible from agriculturalism to industrialism, it also made possible an orderly transition from a social order (i.e., a central project) based on religious tradition to a social order that was largely secular. Americans (especially Americans who don’t travel) are not aware of the degree to which European society is secularized, and much of this occurred in the nineteenth century. Matthew Arnold’s poem Dover Beach was an important early expression of secularization.

Nietzsche already saw this secularization happening in the nineteenth century, and, of course, Darwin, a proper English gentleman, worked his own scientific revolution in the midst of the Victorian period, which played an important role in secularization. Rather than being personally destroyed for his efforts — which is what almost any other society would have done to a man like Darwin — Darwin was buried in Westminster Abbey and treated like a national hero.

Considerable intellectual toughness was a necessary condition of making a peaceful transition from agriculturalism to industrialism, and we can find the requisite toughness in the writers of nineteenth century England. The intellectual honesty of Matthew Arnold is bracing and refreshing. Arnold’s “Sweetness and Light” is a remarkable essay, and not at all how we today would characterize the aspirations of Victorian society, but in comparison to the horrific counterfactual that might have attended the industrial revolution under other circumstances, the grim Victorian world described so movingly by Charles Dickens is relatively benign. Since we have, for the most part, in our collective historical imagination, consigned nineteenth century English literature to our understanding of a genteel and proper Victorianism, it is easy to believe that the men of the nineteenth century did not yet possess the kind of raw, unsparing honesty that the twentieth century forced upon us. Reading Arnold now, in the twenty-first century, I see that this is not true.

Matthew Arnold’s world may have been innocent of World Wars, Holocausts, genocides, nuclear annihilation, and the rigorously realized horrors bequeathed to us by the twentieth century, but it was not an innocent world. History may always reveal new horrors to us, but even in the slightly less horrific past, there were horrors aplenty to preclude any kind of robust innocence on the part of human beings. And it is interesting to reflect that, while the Victorian Era is remembered for its social and cultural repression, it is not remembered for the scope and scale of its atrocities.

This is significant in light of the fact that the twentieth century, which has been seen as a liberation of society once Victorian constraints were swept away, is remembered for the scope and scale of its atrocities. Again, the Victorians did a better job than we did in managing the great transitions of our respective times. Voltaire famously said (during the Enlightenment) that we commit atrocities because we believe absurdities. If this is true, then the absurdities believed by the Victorians were less pernicious than the absurdities believed in the twentieth century.

The late-Victorian or early Edwardian Oscar Wilde in his De Profundis was raw and unsparing, but was rather too self-serving to measure up to the standard of intellectual honesty set by Matthew Arnold. (I fully understand that most of my contemporaries would probably disagree with this judgment.) However, Wilde’s heresies, like Arnold’s honesty, were characterized by a great sense of style. We may criticize the Victorian legal and penal system for essentially destroying Wilde, but it was also the Victorian cultural milieu that made Oscar Wilde possible. If Wilde had not been quite so daring, he might have gotten by without provoking the authorities to respond to him as they did.

Oscar Wilde’s The Soul of Man Under Socialism is a wonderful essay, employing the resources of Wilde’s legendary wit in order to make a serious point. Like many of Wilde’s famous witticisms, his central motif is the contravention of received wisdom, forcing us to see things in a new perspective. Though we know in hindsight the caustic if not criminal consequences for individualism under socialism, Wilde did not have the benefit of hindsight, and Wilde makes the case that socialism will make authentic individualism possible for the first time. Wilde’s conception of individualism under socialism was in fact a paean to his own individualism, carved out within the limitations of Victorian society. This, too, is an ongoing legacy of Victorianism, which was sufficiently large and comprehensive to include individuals as diverse as Queen Victoria, Charles Darwin, Matthew Arnold, and Oscar Wilde.

. . . . .

Extrapolating Protagoras’ Man-the-Measure Doctrine

17 March 2018

Saturday

In the spirit of my Extrapolating Plato’s Definition of Being, in which I took a short passage from Plato and extrapolated it beyond its originally intended scope, I would like to take a famous line from Protagoras and also extrapolate this beyond its originally intended scope. The passage from Protagoras I have in mind is his most famous bon mot:

“Man is the measure of all things, of the things that are, that they are, and of the things that are not, that they are not.”

…and in the original Greek…

“πάντων χρημάτων μέτρον ἐστὶν ἄνθρωπος, τῶν μὲν ὄντων ὡς ἔστιν, τῶν δὲ οὐκ ὄντων ὡς οὐκ ἔστιν”

Presocratic scholarship has focused on the relativism of Protagoras’ μέτρον, especially in comparison to the strong realism of Plato, but I don’t take the two to be mutually exclusive. On the contrary, I think we can better understand Plato through Protagoras and Protagoras through Plato.

Firstly, the Protagorean dictum reveals at once both the inherent naturalism of Greek philosophy, which is the spirit that continues to motivate the western philosophical tradition (which Bertrand Russell once commented is all, essentially, Greek philosophy), and the ontologizing nature of Greek thought, which is another persistent theme of western philosophy, though less often noticed than the naturalistic theme. Plato, despite his otherworldly realism, is part of this inherent naturalism of Greek philosophy, which in our own day has become explicitly naturalistic. Indeed, Greek philosophy since ancient Greece might be characterized as the convergence upon a fully naturalistic conception of the world, though this has been a long and bumpy road.

The naturalism of Greek thought, in turn, points to the proto-scientific character of Greek philosophy. The closest approximation to modern scientific thought prior to the scientific revolution is to be found in works such as Archimedes’ Statics and Eratosthenes of Cyrene’s estimate of the circumference of the earth. If these examples are not already fully scientific inquiries, they are at least proto-science, from which a fully scientific method might have emerged under different historical conditions.

Plato and Protagoras were both guilty of a certain degree of mysticism, but strong traces of the scientific naturalism of Greek thought are expressed in their work. Protagoras’ μέτρον in particular can be understood as an early step in the direction of quantificational concepts. Quantification is central to scientific thought (in my podcast The Cosmic Archipelago, Part II, I offered a variation on the familiar Cartesian theme of cogito, ergo sum, suggesting that, from the perspective of science, we could say I measure, therefore I am), and when we think of quantification we think of measurement in the sense of gradations on a standard scale. However, the most fundamental form of quantification is revealed by counting, and counting is essentially the determination of whether something exists or not. Thus the Protagorean μέτρον (specifically, the things that are, that they are, and the things that are not, that they are not) is a quantificational schema for determining existence relative to a human observer. Protagoras’ μέτρον is a postulate of counting, and without counting there would be no mathematicized natural science.

All scientific knowledge as we know it is human scientific knowledge, and all of it is therefore anthropocentric in a way that is not necessarily a distortion. For human beings to have knowledge of the world in which they find themselves, they must have knowledge that the human mind can assimilate. Our epistemic concepts are the framework we have erected in order to make sense of the world, and these concepts are human creations. That does not mean that they are wrong, even if they have been frequently misleading. The Pyrrhonian skeptic exploits this human, all-too-human weakness in our knowledge, claiming that because our concepts are imperfect, no knowledge whatsoever is possible. This is a strawman argument. Knowledge is possible, but it is human knowledge. Protagoras made this explicit. (This is one of the themes of my Cosmic Archipelago series.)

Taking Plato and Protagoras together — that is, taking Plato’s definition of being and Protagoras’ doctrine of measure — we probably come closer to the originally intended meaning of both Plato and Protagoras than if we treat them in isolation, a fortiori if we treat them as antagonists. Plato’s definition of being — the power to affect or be affected — and Protagoras’ dictum — that man is the measure of all things, which we can take to mean that quantification begins with a human observer — naturally coincide when the power to affect or be affected is understood relative to the human power to affect or be affected.

Since human knowledge begins with a human observer and human experience, knowledge necessarily also follows from that which affects a human being or that which a human being can effect. The role of experimentation in science since the scientific revolution takes this ontological interaction of affecting and being affected, makes it systematic, and derives all natural knowledge from this principle. Human beings formulate scientific experiments, and in so doing affect the world in building an experimental apparatus and running the experiment. The experiment, in turn, affects human beings as the scientist observes the experiment running and records how it affects him, i.e., what he observes in the world as a result of his intervention in the course of events.

Plato and Protagoras taken together as establishing an initial ontological basis for quantification lay the metaphysical groundwork for scientific naturalism, even if neither philosopher was a scientific naturalist in the strict sense.

. . . . .

I have previously discussed Protagoras’ μέτρον in Ontological Ruminations: Six Protagorean Propositions on the Nature of Man and the World and A Non-Constructive World.

. . . . .

The Historiography of Big History

16 February 2018

Friday

In Rational Reconstructions of Time I described a series of intellectual developments in historiography in which big history appeared in the penultimate position as a recent historiographical innovation. There is another sense, however, in which there have always been big histories — that is to say, histories that take us from the origins of our world through the present and into the future — and we can identify a big history that represents many of the major stages through which western thought has passed. In what follows I will focus on western history, in so far as any regional focus is relevant, as “history” is a peculiarly western idea, originating in classical antiquity among the Greeks, and with its later innovations all emerging from western thought.

Shortly after Christianity emerged, a Christian big history was formulated across many works by many different authors, but I will focus on Saint Augustine’s City of God. Christianity takes up the mythological material of the earlier seriation of western civilization and codifies it in the light of the new faith. Augustine presented an over-arching vision of human history that corresponded to the salvation history of humanity according to Christian thought. Some scholars have argued that western Christianity is distinctive in its insistence upon the historicity of its salvation history. If this is true, then Augustine’s City of God is Exhibit “A” in the development of this idea, tracing the dual histories of the City of God and the City of Man, each of which punctuates the other in actual moments of historical time when the two worlds are inseparable for all their differences. Here, the world behind the world is always vividly present, and in a Platonic way (for Augustine was a Christian Platonist) was more real than the world we take for the real world.

The Christian vision of history we find in Saint Augustine passed through many modifications but in its essentials remained largely intact until the Enlightenment, when the combined force of the scientific revolution and political turmoil began to dissolve the institutional structures of agricultural civilization. Here we have the remarkable work of Kant, better known for his three critiques, but who also wrote his Universal Natural History and Theory of the Heavens. The idea of a universal natural history extends the idea of natural history to the whole of the cosmos, and to human endeavor as well, and more or less coincides with the contemporary conception of big history, at least in so far as the scope and character of big history is concerned. Kant deserves a place in intellectual history for this if for nothing else. In other words, despite his idealist philosophy (formulated decades after his Universal Natural History), Kant laid the foundations of a naturalistic historiography for the whole of natural history. Since then, we have only been filling in the blanks.

Marie Jean Antoine Nicolas de Caritat, marquis de Condorcet, author of Sketch for a Historical Picture of the Progress of the Human Spirit

The Marquis de Condorcet took this naturalistic conception of universal history and interpreted it within the philosophical context of the Encyclopédistes and the French Philosophes (being far more empiricist and materialist than Kant), in writing his Esquisse d’un tableau historique des progrès de l’esprit humain (Sketch for a Historical Picture of the Progress of the Human Mind), in ten books, the tenth book of which explicitly concerns itself with the future progress of the human mind. I may be wrong about this, but I believe this to be the first sustained effort at historiographical futurism in western thought. And Condorcet wrote this work while on the run from French revolutionary forces, having been branded a traitor by the revolution he had served. That Condorcet wrote his big history of progress and optimism while hiding from the law is a remarkable testimony to both the man and the idea to which he bore witness.

After the rationalism of the Enlightenment, European intellectual history took a sharp turn in another direction, and it was romanticism that was the order of the day. Kant’s younger contemporary, Johann Gottfried Herder, wrote his Ideen zur Philosophie der Geschichte der Menschheit (Ideas upon Philosophy and the History of Mankind, or Reflections on the Philosophy of History of Mankind, or any of the other translations of the title), as well as several essays on related themes (cf. the essays, “How Philosophy Can Become More Universal and Useful for the Benefit of the People” and “This Too a Philosophy of History for the Formation of Humanity”), at this time. In some ways, Herder’s romantic big history closely resembles the big histories of today, as he begins with what was known of the universe — the best science of the time, as it were — though he continues on in a way to justify regional nationalistic histories, which is in stark contrast to the big history of our time. We could learn from Herder on this point, if only we could be truly scientific in our objectivity and set aside the ideological conflicts that have arisen from nationalistic conceptions of history, which still today inform perspectives in historiography.

In a paragraph that I have previously quoted in Scientific Metaphysics and Big History there is a plan for a positivist big history as conceived by Otto Neurath:

“…we may look at all sciences as dovetailed to such a degree that we may regard them as parts of one science which deals with stars, Milky Ways, earth, plants, animals, human beings, forests, natural regions, tribes, and nations — in short, a comprehensive cosmic history would be the result of such an agglomeration… Cosmic history would, as far as we are using a Universal Jargon throughout all branches of research, contain the same statements as our unified science. The language of our Encyclopedia may, therefore, be regarded as a typical language of history. There is no conflict between physicalism and this program of cosmic history.”

Otto Neurath, Foundations of the Social Sciences, Chicago and London: The University of Chicago Press, 1970 (originally published 1944), p. 9

To my knowledge, no one wrote this positivist big history, but it could have been written, and perhaps it should have been written. I can imagine an ambitious but eccentric scholar completely immersing himself or herself in the intellectual milieu of early twentieth century logical positivism and logical empiricism, and eventually coming to write, ex post facto, the positivist big history imagined by Neurath but not at that time executed. One might think of such an effort as a truly Quixotic quest, or as the fulfillment of a tradition of writing big histories on the basis of current philosophical thought.

From this thought experiment in the ex post facto writing of a history not written in its own time we can make an additional leap. I have noted elsewhere (The Cosmic Archipelago, Part III: Reconstructing the History of the Observable Universe) that scientific historiography has reconstructed the histories of peoples who did not write their own histories. This could be done in a systematic way. An exhaustive scientific research program in historiography could take the form of writing the history of every time and place from the perspective of every other time and place. We would have the functional equivalent of this research program if we had a big history written from the perspective of every time and place for which a distinctive perspective can be identified, because each big history from each identifiable perspective would be a history of the world entire, and thus would subsume under it all regional and parochial histories.

I previously proposed an idea of a similarly exhaustive historiography of the kind that could only be written once the end was known. In my Who will read the Encyclopedia Galactica? I suggested that Freeman Dyson’s eternal intelligences could busy themselves as historiographers through the coming trillions of years when the civilizations of the Stelliferous Era are no more, and there can be no more civilizations of this kind because there are no longer planets being warmed by stellar insolation, hence no more civilizations of planetary endemism.

It is a commonplace of historiographical thought that each generation must write and re-write the past for its own purposes and from its own point of view. Gibbon’s Enlightenment history of the later Roman Empire is distinct in temperament and outlook from George Ostrogorsky’s History of the Byzantine State. While an advanced intelligence in the post-Stelliferous Era would want to bring its own perspective to the histories of the civilizations of the Stelliferous Era, it would also want a complete “internal” account of these civilizations, in the spirit of thought experiments in writing histories that could have or should have been written during particular periods, but which, for one reason or another, never were written. If we imagine eternal intelligences (at least while sufficient energy remains in the universe) capable of running detailed simulations of the past, this could be a source of the immersive scholarship that would make it possible to write the unwritten big histories of ages that produced a distinctive philosophical perspective, but which did not produce a historian (or the idea of a big history) that could execute the idea in historical form.

There is a sense in which these potentially vast unwritten histories, the unactualized rivals to Gibbon’s Decline and Fall of the Roman Empire, are like the great unbuilt buildings, conceived and sketched by architects, but for which there was neither the interest nor the wherewithal to build. I am thinking, above all, of Étienne-Louis Boullée’s Cenotaph for Isaac Newton, but I could just as well cite the unbuilt cities of Antonio Sant’Elia, the skyscraper designed by Antoni Gaudí, or Frank Lloyd Wright’s mile-high skyscraper (cf. Planners and their Cities, in which I discuss other great unbuilt projects, such as Le Corbusier’s Voisin Plan for Paris and Wright’s Broadacre City). Just as I have here imagined unwritten histories eventually written, so too I have imagined these great unbuilt buildings someday built. Specifically, I have suggested that a future human civilization might retain its connection to the terrestrial past without duplicating the past by building structures proposed for Earth but never built on Earth.

History is an architecture of the past. We construct a history for ourselves, and then we inhabit it. If we don’t construct our own history, someone else will construct our history for us, and then we live in the intellectual equivalent of The Projects, trying to make a home for ourselves in someone else’s vision of our past. It is not likely that we will feel entirely comfortable within a past conceived by another who does not share our philosophical presuppositions.

From the perspective of big history, and from the perspective of what I call formal historiography, history is also an architecture of the future, which we inhabit with our hopes and fears and expectations and intentions of the future. And indeed we might think of big history as a particular kind of architecture — a bridge that we build between the past and the future. In this way, we can understand why and how most ages have written big histories for themselves out of the need to bridge past and future, between which the present is suspended.

. . . . .

Studies in Grand Historiography

2. Addendum on Big History as the Science of Time

3. The Epistemic Overview Effect

7. Big History and Historiography

8. Big History and Scientific Historiography

9. Philosophy for Industrial-Technological Civilization

10. Is it possible to specialize in the big picture?

11. Rational Reconstructions of Time

12. History in an Extended Sense

. . . . .

The Three Revolutions

12 November 2017

Sunday

Three Revolutions that Shaped the Modern World

The world as we know it today, civilization as we know it today (because, for us, civilization is the world, our world, the world we have constructed for ourselves), is the result of three revolutions. What was civilization like before these revolutions? Civilization began with the development of an agricultural or pastoral economy subsequently given ritual expression in a religious central project that defined independently emergent civilizations. Though widely scattered across the planet, these early agricultural civilizations had important features in common, with most of the pristine civilizations beginning to emerge shortly after the Holocene warming period of the current Quaternary glaciation.

Although independently originating, these early civilizations had much in common — arguably, each had more in common with the others emergent about the same time than they have in common with contemporary industrialized civilization. How, then, did this very different industrialized civilization emerge from its agricultural civilization precursors? This was the function of the three revolutions: to revolutionize the conceptual framework, the political framework, and the economic framework from its previous traditional form into a changed modern form.

The institutions bequeathed to us by our agricultural past (the era of exclusively biocentric civilization) were either utterly destroyed and replaced with de novo institutions, or traditional institutions were transformed beyond recognition to serve the needs of a changed human world. There are, of course, subtle survivals from the ten thousand years of agricultural civilization, and historians love to point out some of the quirky traditions we continue to follow, though they make no sense in a modern context. But this is peripheral to the bulk of contemporary civilization, which is organized by the institutions changed or created by the three revolutions.

The Scientific Revolution

The scientific revolution begins as the earliest of the three revolutions, in the early modern period, and more specifically with Copernicus in the sixteenth century. The work of Copernicus was elaborated and built upon by Kepler, Galileo, Huygens, and a growing number of scientists in western Europe, who began with physics, astronomy, and cosmology, but, in framing a scientific method applicable to the pursuit of knowledge in any field of inquiry, created an epistemic tool that would be universally applied.

The application of the scientific method had the de facto consequence of stigmatizing pre-modern knowledge as superstition, and the attitude emerged that it was necessary to extirpate the superstitions of the past in order to build anew on solid foundations of the new epistemic order of science. This was perceived as an attack on traditional institutions, especially traditional cultural and social institutions. It was this process of the clearing away of old knowledge, dismissed as irrational superstition, and replacing it with new scientific knowledge, that gave us the conflict between science and religion that still simmers in contemporary civilization.

The scientific revolution is ongoing, and continues to revolutionize our conceptual framework. For example, four hundred years into the scientific revolution, in the twentieth century, the Earth sciences were revolutionized by plate tectonics and geomorphology, while cosmology was revolutionized by general relativity and physics was revolutionized by quantum theory. The world we understood at the end of the twentieth century was a radically different place from the world we understood at the beginning of the twentieth century. This is due to the iterative character of the scientific method, which we can continue to apply not only to bodies of knowledge not yet transformed by the scientific method, but also to earlier bodies of scientific knowledge that, while revolutionary in their time, were not fully comprehensive in their conception and formulation. Einstein recognized this character of scientific thought when he wrote that, “There could be no fairer destiny for any physical theory than that it should point the way to a more comprehensive theory, in which it lives on as a limiting case.”

Democracy in its modern form dates from 1776 and is therefore a comparatively young historical institution.

The Political Revolutions

The political revolutions that began in the last quarter of the eighteenth century, starting with the American Revolution in 1776, followed by the French Revolution in 1789, and then a series of revolutions across South America that displaced Spain and the Spanish Empire from the continent and the western hemisphere (in a kind of revolutionary contagion), ushered in an age of representative government and popular sovereignty that remains the dominant paradigm of political organization today. The consequences of these political revolutions have been raised to the status of a dogma, so that it is no longer considered socially acceptable to propose forms of government not based upon representative institutions and popular sovereignty, however dismally or frequently these institutions disappoint.

We are all aware of the experiment with democracy in classical antiquity, which began in Athens and was spread (sometimes by force) by the Delian League under Athenian leadership until the defeat of Athens by the Spartans and their allies. The ancient experiment with democracy ended with the Peloponnesian War, but there were quasi-democratic institutions throughout the history of western civilization that fell short of perfectly representative institutions, and which especially fell short of the ideal of popular sovereignty implemented as universal franchise. Aristotle, after the Peloponnesian War, had already converged on the idea of a mixed constitution (a constitution neither purely aristocratic nor purely democratic) and the Roman political system over time incorporated institutions of popular participation, such as the Tribune of the People (Tribunus plebis).

Medieval Europe, which Kenneth Clark once called a “conveniently loose political organization,” frequently involved self-determination through the devolution of political institutions to local control, which meant that free cities might be run in an essentially democratic way, even if there were no elections in the contemporary sense. Also, medieval Europe dispensed with slavery, which had been nearly universal in the ancient world, and in so doing was responsible for one of the great moral revolutions of human civilization.

The political revolutions that broke over Europe and the Americas with such force starting in the late eighteenth century, then, had had the way prepared for them by literally thousands of years of western political philosophy, which frequently formulated social ideals long before there was any possibility of putting them into practice. Like the scientific revolution, the political revolutions had deep roots in history, so that we should rightly see them as the inflection points of processes long operating in history, but almost imperceptible in their earliest expression.

Early industrialization often had an incongruous if not surreal character, as in this painting of traditional houses silhouetted against the Madeley Wood Furnaces at Coalbrookdale.

The Industrial Revolution

The industrial revolution began in England with the invention of James Watt’s steam engine, which was, in turn, an improvement upon the Newcomen atmospheric engine, which in turn built upon a long history of an improving industrial technology and industrial infrastructure such as was recorded in Adam Smith’s famous example of a pin factory, and which might be traced back in time to the British Agricultural Revolution, if not before. The industrial revolution rapidly crossed the English Channel and was as successful in transforming the continent as it had been in transforming England. The Germans especially understood that it was the scientific method as applied to industry that drove the industrial revolution forward, as it still does today. It is science rather than the steam engine that truly drove the industrial revolution.

As the scientific revolution drove epistemic reorganization and the political revolutions drove sociopolitical reorganization, the industrial revolution drove economic reorganization. Today, we are all living with the consequences of that reorganization, with more human beings than ever before (both in terms of absolute numbers and in terms of rates) living in cities, earning a living through employment (whether compensated by wages or salary is indifferent; the invariant today is that of being an employee), and organizing our personal time on the basis of clock times that have little to do with the sun and the moon, and schedules that have little or no relationship to the agricultural calendar.

The emergence of these institutions that facilitated the concentration of labor (what Marx would have called “industrial armies”) where it was most needed for economic development indirectly meant the dissolution of multi-generational households, the dissolution of the feeling of being rooted in a particular landscape, the dissolution of the feeling of belonging to a local community, and the dissolution of the way of life that was embodied in these local communities of multi-generational households, bound to the soil and the climate and the particular mix of cultivars that were dietary staples. As science dismissed traditional beliefs as superstition, the industrial revolution dismissed traditional ways of life as impractical and even as unhealthy. Le Corbusier, a great prophet of the industrial city, possessed of revolutionary zeal, forcefully rejected pre-modern technologies of living, asserting, “We must fight against the old-world house, which made a bad use of space. We must look upon the house as a machine for living in or as a tool.”

Revolutionary Permutations

Terrestrial civilization as we know it today is the product of these three revolutions, but must these three revolutions occur, and must they occur in this specific order, for any civilization whatever that would constitute a peer technological civilization with which we might hope to engage in communication? That is to say, if there are other civilizations in the universe (or even in a counterfactual alternative history for terrestrial civilization), would they have to arrive at radio telescopes and spacecraft by this same sequence of revolutions in the same order, or would some other sequence (or some other revolutions) be equally productive of technological civilizations?

This may well sound like a strange question, perhaps an arbitrary question, but this is the sort of question that formal historiography asks. In several posts I have started to outline a conception of formal historiography in which our interest is not only in what has happened on Earth, or what might yet happen on Earth, but what can happen with any civilization whatsoever, whether on Earth or elsewhere (cf. Big History and Scientific Historiography, History in an Extended Sense, Rational Reconstructions of Time, An Alternative Formulation of Rational Reconstructions of Time, and Placeholders for Null-Valued Time). While this conception is not formulated for the express purpose of investigating questions like the Fermi paradox, I hope that the reader can see how such an investigation bears upon the Fermi paradox, the Drake equation, and other “big picture” conceptions that force us to think not in terms of terrestrial civilization, but rather in terms of any civilization whatever.

From a purely formal conception of social institutions, it could be argued that something like these revolutions would have to take place in something like the terrestrial order. The epistemic reorganization of society made it possible to think scientifically about politics, and thus to examine traditional political institutions rationally in a spirit of inquiry characteristic of the Enlightenment. Even if these early forays into political science fall short of contemporary standards of rigor in political science, traditional ideas like the divine right of kings appeared transparently as little better than political superstitions and were dismissed as such. The social reorganization following from the rational examination of political institutions utterly transformed the context in which industrial innovations occurred. If the steam engine or the power loom had been introduced in a time of rigid feudal institutions, no one would have known what to do with them. Consumer goods were not a function of production or general prosperity (as today), but rather were controlled by sumptuary laws, much as the right to engage in certain forms of commerce was granted as a royal favor. These feudal political institutions would not likely have presided over an industrial revolution, but once these institutions were either reformed or eliminated, the seeds of the industrial revolution could take root.

In this interpretation, the epistemic reorganization of the scientific revolution, the social reorganization of the political revolutions, and the economic reorganization of the industrial revolution are all tightly coupled both synchronically (in terms of the structure of society) and diachronically (in terms of the historical succession of this sequence of events). I am, however, suspicious of this argument because of its implicit anthropocentrism as well as its teleological character. Rather than seeking to justify or to confirm the world we know, framing the historical problem in this formal way gives us a method for seeking variations on the theme of civilization as we know it; alternative sequences could be the basis of thought experiments that would point to different kinds of civilization. Even if we don’t insist that this sequence of revolutions is necessary in order to develop a technological civilization, we can see how each development fed into subsequent developments, acting as a social equivalent of directional selection. If the sequence were different, presumably the directional selection would be different, and the development of civilization taken in a different direction.

I will not here attempt a detailed analysis of the permutations of sequences laid out in the graphic above, though the reader may wish to think through some of the implications of civilizations differently structured by different revolutions at different times in their respective development. For example, many science fiction stories imagine technological civilizations with feudal institutions, whether these feudal institutions are retained unchanged from a distant agricultural past, or whether they were restored after some kind of political revolution analogous to those of terrestrial history, so one could say that, prima facie, political revolution might be entirely left out, i.e., that political reorganization is dispensable in the development of technological civilization. I would not myself make this argument, but I can see that the argument can be made. Such arguments could be the basis of thought experiments that would present civilization-as-we-do-not-know-it, but which nevertheless inhabit the same parameter space of civilization-as-we-know-it.
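For the reader who does wish to think through the permutations, they can be enumerated mechanically. What follows is a minimal sketch in Python, assuming only the three revolutions named above; the function name revolution_sequences and the allow_omissions flag are hypothetical, introduced purely for illustration, and nothing here should be read as an analysis of which sequences are historically plausible.

```python
from itertools import permutations

# Labels taken from this essay; the enumeration is only an illustration.
REVOLUTIONS = ("scientific", "political", "industrial")


def revolution_sequences(revolutions=REVOLUTIONS, allow_omissions=False):
    """Return every ordering of the given revolutions as a list of tuples.

    With allow_omissions=True, also include sequences in which one or more
    revolutions never occur (including the empty sequence, where none occur).
    """
    if allow_omissions:
        lengths = range(len(revolutions) + 1)
    else:
        lengths = [len(revolutions)]
    sequences = []
    for k in lengths:
        # permutations(..., k) yields every ordered selection of k revolutions
        sequences.extend(permutations(revolutions, k))
    return sequences


full = revolution_sequences()
with_omissions = revolution_sequences(allow_omissions=True)

for seq in full:
    print(" -> ".join(seq))
```

With all three revolutions required there are six orderings, the first of which is the terrestrial one (scientific, political, industrial). If revolutions may be left out, there are sixteen sequences in all, among them the pair (scientific, industrial), which corresponds to the science fiction scenario of a technological civilization that never underwent a political revolution.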

. . . . .

Why Freedom of Inquiry in Academia Matters to an Autodidact

11 November 2016

Friday

When I attempt to look back on my personal history in a spirit of dispassionate scientific inquiry, I find that I readily abandon entire regions of my past in my perhaps unseemly hurry to develop the next idea that I have, which I am excited to follow wherever it leads me. Moreover, contemplating one’s personal history can be a painful and discomfiting experience, so that, in addition to the headlong rush into the future, there is the desire to dissociate oneself from past mistakes, even when these past mistakes were provisional positions, known at the time to be provisional, but which were nevertheless necessary steps in order to begin (as well as to continue) the journey of self-discovery, which is at the same time a journey of discovering the world and of one’s place in the world.

In my limited attempts to grasp my personal history as an essential constituent of my present identity, among all the abandoned positions of my past I find that I understood two important truths about myself early in life (i.e., in my teenage years), even if I did not formulate them explicitly, but only acted intuitively upon things that I immediately understood in my heart-of-hearts. One of these things is that I have never been, am not now, and never will be either of the left or of the right. The other thing is, despite having been told many times that I should have pursued higher education, and despite the fact that most individuals who have the interests that I have are in academia, that I am not cut out for academia, whether temperamentally, psychologically, or socially, notwithstanding the fact that, of necessity, I have had to engage in alienated labor in order to support myself, whereas if I had pursued a career in academia, I might have earned a living by dint of my intellectual efforts.

The autodidact is a man with few if any friends (I could tell you a few stories about this, but I will desist at present). The non-partisan, much less the anti-partisan, is a man with even fewer friends. Adults (unlike childhood friends) tend to segregate along sectional lines, as in agrarian-ecclesiastical civilization we once segregated ourselves even more rigorously along sectarian lines. If you do not declare yourself, you will find yourself outside every ideologically defined circle of friends. And I am not claiming to be in the middle; I am not claiming to strike a compromise between left and right; I am not claiming that I have transcended left and right; I am not claiming that I am a moderate. I claim only that I belong to no doctrinaire ideology.

It has been my experience that, even if you explicitly and carefully preface your remarks with a disavowal of any political party or established ideological position, if you give voice to a view that one side takes to be representative of the other side, they will immediately take your disavowal of ideology to be a mere ruse, and perhaps a tactic in order to gain a hearing for an unacknowledged ideology. The partisans will say, with a knowing smugness, that anyone who claims not to be partisan is really a partisan on the other side — and both sides, left and right alike, will say this. One then finds oneself in overlapping fields of fire. This experience has only served to strengthen my non-political view of the world; I have not reacted against my isolation by seeking to fall into the arms of one side or the other.

This non-political perspective — which I am well aware would be characterized as ideological by others — that eschews any party membership or doctrinaire ideology, now coincides with my sense of great retrospective relief that I did not attempt an academic career path. I have watched with horrified fascination as academia has eviscerated itself in recent years. I have thanked my lucky stars, but most of all I have thanked my younger self for having understood that academia was not for me and for not having taken this path. If I had taken this path, I would be myself subject to the politicization of the academy that in some schools means compulsory political education, increasingly rigid policing of language, and an institution more and more making itself over into the antithesis of the ideal pursuit of knowledge and truth.

But the university is a central institution of western civilization; it is the intellectual infrastructure of western civilization. I can affirm this even as an autodidact who has never matriculated in the university system. I have come to understand, especially in recent years, how it is the western way to grasp the world by way of an analytical frame of mind. The most alien, the most foreign, the most inscrutable otherness can be objectively and dispassionately approached by the methods of scientific inquiry that originated in western civilization. This character of western thought is far older than the scientific revolution, and almost certainly has its origins in the distinctive contribution of the ancient Greeks. As soon as medieval European civilization began to stabilize, the institution of the university emerged as a distinctive form of social organization that continues to this day. Since I value western civilization and its scientific tradition, I must also value the universities that have been the custodians of this tradition. It could even be said that the autodidact is parasitic upon the universities that he spurns: I read the books of academics; I benefit from the scientific research carried on at universities; my life and my thought would not have been possible except for the work that goes on in universities.

It is often said of the Abrahamic religions that they all pray to the same God. So too all who devote their lives to the pursuit of truth pay their respects to the same ancestors: academicians and their institutions look back to Plato’s Academy and Aristotle’s Lyceum, just as do I. We have the same intellectual ancestors, read the same books, and look to the same ideals, even if we approach those ideals differently. In the same way that I am a part of Christian civilization without being a Christian, in an expansive sense I am a part of the intellectual tradition of western civilization represented by its universities, even though I am not of the university system.

As an autodidact, I could easily abandon the western world, move to any place in the world where I was able to support myself, and immerse myself in another tradition, but western civilization means something to me, and that includes the universities of which I have never been a part, just as much as it includes the political institutions of which I have never been a part. I want to know that these sectors of society are functioning in a manner that is consistent with the ideals and aspirations of western civilization, even if I am not part of these institutions.

There are as many autodidacticisms as there are autodidacts; the undertaking is an essentially individual and indeed solitary one, even an individualistic one, hence also essentially an isolated undertaking. Up until recently, in the isolation of my middle age, I had questioned my avoidance of academia. Now I no longer question this decision of my younger self, but am, rather, grateful that this is something I understood early in my life. But that does not exempt me from an interest in the fate of academia.

All of this is preface to a conflict that is unfolding in Canada that may call the fate of the academy into question. Elements at the University of Toronto have found themselves in conflict with a professor at the school, Jordan B. Peterson. Prior to this conflict I was not familiar with Peterson’s work, but I have been watching his lectures available on YouTube, and I have become an unabashed admirer of Professor Peterson. He has transcended the disciplinary silos of the contemporary university and brings together an integrated approach to the western intellectual tradition.

Both Professor Peterson and his most vociferous critics are products of the contemporary university. The best that the university system can produce now finds itself in open conflict with the worst that the university system can produce. Moreover, the institutional university (by which I mean those who control the institutions and who make its policy decisions) has chosen to side with the worst rather than with the best. Professor Peterson noted in a recent update of his situation that the University of Toronto could have chosen to defend his free speech rights, and could have taken this battle to the Canadian supreme court if necessary, but instead the university chose to back those who would silence him. Thus even if the University of Toronto relents in its attempts to rein in the freedom of expression of its staff, it has already revealed what side it is on.

There are others fighting the good fight from within the institutions that have, in effect, abandoned them and have turned against them. For example, Heterodox Academy seeks to raise awareness of the lack of diversity of viewpoints in contemporary academia. Ranged against those defending the tradition of western scholarship are those who have set themselves up as revolutionaries engaged in the long march through the institutions, and every department that takes a particular pride in training activists rather than scholars, placing indoctrination before education and inquiry.

If freedom of inquiry is driven out of the universities, it will not survive in the rest of western society. When Justinian closed the philosophical schools of Athens in 529 AD (cf. Emperor Justinian’s Closure of the School of Athens) the western intellectual tradition was already on life support, and Justinian merely pulled the plug. It was almost a thousand years before the scientific spirit revived in western civilization. I would not want to see this happen again. And, make no mistake, it can happen again. Every effort to shout down, intimidate, and marginalize scholarship that is deemed to be dangerous, politically unacceptable, or offensive to some interest group, is a step in this direction.

To employ a contemporary idiom, I have no skin in the game when it comes to universities. It may be, then, that it is presumptuous for me to say anything. Mostly I have kept my silence, because it is not my fight. I am not of academia. I do not enjoy its benefits and opportunities, and I am not subject to its disruptions and disappointments. But I must be explicit in calling out the threat to freedom of inquiry. Mine is but a lone voice in the wilderness. I possess no wealth, fame, or influence that I can exercise on behalf of freedom of inquiry within academia. Nevertheless, I add my powerless voice to those who have already spoken out against the attempt to silence Professor Peterson.

. . . . .

Origins of Globalization

20 December 2015

Sunday

The politics of a word

It is unfortunate to have to use the word “globalization,” as it is a word that rapidly came into vogue and then passed out of vogue with equal rapidity, and as it passed out of vogue it became spattered with a great many unpleasant associations. I had already noted this shift in meaning in my book Political Economy of Globalization.

In the earliest uses, “globalization” had a positive connotation; while “globalization” could be used in an entirely objective economic sense as a description of the planetary integration of industrialized economies, this idea almost always was delivered with a kind of boosterism. One cannot be surprised that the public rapidly tired of hearing about globalization, and it was perhaps the sub-prime mortgage crisis that delivered the coup de grâce.

In much recent use, “globalization” has taken on a negative connotation, with global trade integration and the sociopolitical disruption that this often causes blamed for every ill on the planet. Eventually the hysterical condemnation of globalization will go the way of boosterism, and future generations will wonder what everyone was talking about at the end of the twentieth century and the beginning of the twenty-first century. But in the meantime the world will have been changed, and these future generations will not care about globalization only because the process has converged on its natural end.

Despite this history of unhelpful connotations, I must use the word, because if I did not use it, the relevance of what I am saying would probably be lost. Globalization is important, even if the word has been used in misleading ways; globalization is a civilizational-level transformation that leaves nothing untouched, because at the culmination of the process of globalization lies a new kind of civilization, planetary civilization.

I suspect that the reaction to “planetary civilization” would be very different from the reactions evoked by “globalization,” though the two are related as process to outcome. Globalization is the process whereby parochial, geographically isolated civilizations are integrated into a single planetary civilization. The integration of planetary civilization is being consolidated in our time, but it has its origins about five hundred years ago, when two crucial events began the integration of our planet: the Copernican Revolution and the Columbian exchange.

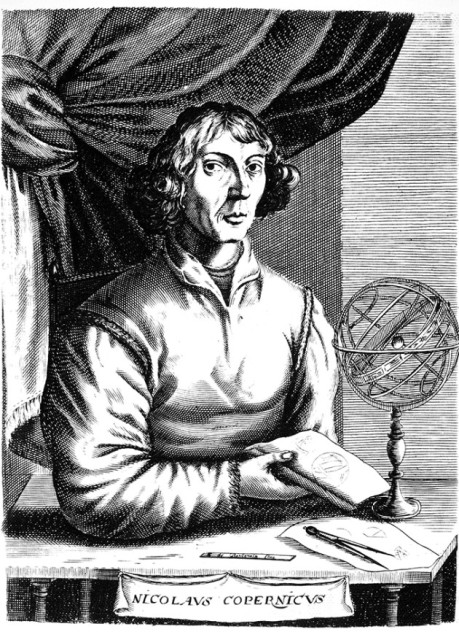

Copernicus continues to shape not only how we see the universe, but also our understanding of our place within it.

The Copernican Revolution

The intellectual basis of our world as a world, i.e., as a planet, and as one planet among other planets in a planetary system, is the result of the Copernican revolution. The Copernican revolution forces us to acknowledge that the Earth is one planet among planets. The principle has been extrapolated so that we eventually also acknowledged that the sun is one star among stars, our galaxy is one galaxy among galaxies, and eventually we will have to accept that the universe is but one universe among universes, though at the present level of the development of science the universe defines the limit of knowledge because it represents the possible limits of observation. When we eventually transcend this limit, it will be not by abandoning empirical evidence as the basis of science, but by extending empirical evidence beyond the limits observed today.

As one planet among many planets, the Earth loses its special status of being central in the universe, only to regain its special status as the domicile of an organism that can uniquely understand its status in the universe, overcoming the native egoism of any biological organism that survives first and asks questions later. Life that begins merely as self-replication and eventually adds capacities until it can feel and eventually reason is probably rare in the universe. The unique moral qualities of a being derived from such antecedents but able to transcend the exigencies of the moment are the moral legacy of the Copernican Revolution.

As the beginning of the Scientific Revolution, the Copernican Revolution is also part of a larger movement that would ultimately become the basis of a new civilization. Industrial-technological civilization is a species of scientific civilization; it is science that provides the intellectual infrastructure that ties together scientific civilization. Science is uniquely suited to its unifying role, as it constitutes the antithesis of the various ethnocentrisms that frequently define pre-modern forms of civilization, which thereby exclude even as they expand imperially.

Civilization unified sub specie scientiae is unified in a way that no ethnic, national, or religious community can be organized. Science is exempt from the Weberian process of defining group identity through social deviance, though this is not well understood, and because not well understood, often misrepresented. The exclusion of non-science from the scope of science is often assimilated to Weberian social deviance, though it is something else entirely. Science is selective on the basis of empirical evidence, not social convention. While social convention is endlessly malleable, empirical evidence is unforgiving in the demarcation it makes between what falls within the scope of the confirmable or disconfirmable, and what falls outside this scope. Copernicus began the process of bringing the world entire within this scope, and in so doing changed our conception of the world.

The Columbian Exchange

While the Copernican Revolution provided the intellectual basis of the unification of the world as a planetary civilization, the Columbian Exchange provided the material and economic basis of the unification of the world as a planetary civilization. In the wake of the voyages of discovery of Columbus and Magellan, and many others that followed, the transatlantic trade immediately began to exchange goods between the Old World and the New World, which had been geographically isolated. The biological consequences of this exchange were profound, which meant that the impact on biocentric civilization was transformative.

We know the story of what happened, even if we do not know this story in detail, because it is the story that gave us the world that we know today. Human beings, plants, and animals crossed the Atlantic Ocean and changed the ways of life of people everywhere. New products like chocolate and tobacco became cash crops for export to Europe; old products like sugar cane thrived in the Caribbean Basin; invasive species moved in; indigenous species were pushed out or became extinct. Maize and potatoes rapidly spread to the Old World and became staple crops on every inhabited continent.

There is little in the economy of the world today that does not have its origins in the Columbian exchange, or was not prefigured in the Columbian exchange. Prior to the Columbian exchange, long distance trade was a trickle of luxuries that occurred between peoples who never met each other at the distant ends of a chain of middlemen that spanned the Eurasian continent. The world we know today, of enormous ships moving countless shipping containers around the world like so many chess pieces on a board, has its origins in the Age of Discovery and the great voyages that connected each part of the world to every other part.

Defining planetary civilization

In my presentation “What kind of civilizations build starships?” (at the 2015 Starship Congress) I proposed that civilizations could be defined (and given a binomial nomenclature) by employing the Marxian distinction between intellectual superstructure and economic infrastructure. This is why I refer to civilizations in hyphenated form, like industrial-technological civilization or agrarian-ecclesiastical civilization. The first term gives the economic infrastructure (what Marx also called the “base”) while the second term gives the intellectual superstructure (which Marx called the ideological superstructure).

In accord with this approach to specifying a civilization, the planetary civilization bequeathed to us by globalization may be defined in terms of its intellectual superstructure by the Copernican revolution and in terms of its economic infrastructure by the Columbian exchange. Thus terrestrial planetary civilization might be called Columbian-Copernican civilization (though I don’t intend to employ this name as it is not an attractive coinage).

Planetary civilization is the civilization that emerges when geographically isolated civilizations grow until all civilizations are contiguous with some other civilization or civilizations. It is interesting to note that this is the opposite of the idea of allopatric speciation; biological evolution cannot function in reverse in this way, reintegrating that which has branched off, but the evolution of mind and civilization can bring back together divergent branches of cultural evolution into a new synthesis.

Not the planetary civilization we expected

While the reader is likely to have a different reaction to “planetary civilization” than to “globalization,” both are likely to be misunderstood, though misunderstood in different ways and for different reasons. Discussing “planetary civilization” is likely to evoke utopian visions of our Earth not only intellectually and economically unified, but also morally and politically unified. The world today is in fact unified economically and, somewhat less so, intellectually (in industrialized economies science has become the universal means of communication, and mathematics is the universal language of science), but unification of the planet by trade and commerce has not led to political and moral unification. This is not the planetary civilization once imagined by futurists, and, like most futurisms, once the future arrives we do not recognize it for what it is.

There is a contradiction in the contemporary critique of globalization that abhors cultural homogenization on the one hand, while on the other hand bemoans the ongoing influence of ethnic, national, and religious regimes that stand in the way of the moral and political unification of humankind. It is not possible to have both. In so far as the utopian ideal of planetary civilization aims at the moral and political unification of the planet, it would by definition result in a cultural homogenization of the world far more destructive of traditional cultures than anything seen so far in human civilization. And in so far as the fait accompli of scientific and commercial unification of planetary civilization fails to develop into moral and political unification, it preserves cultural heterogeneity.

Incomplete globalization, incomplete planetary civilization

The process of globalization is not yet complete. China is nearing the status of a fully industrialized economy, and India is making the same transition, albeit more slowly and by another path. The beginnings of the industrialization of Africa are to be seen, but this process will not be completed for at least a hundred years, and maybe it will require two hundred years.